Testing with pytest. Unit, Integration and Smoke tests.

Levon Davtyan - 1st year CS

Introduction

Errors are every programmer's nightmare. Those red blobs of cryptic text appear in the terminal even when you are certain that you made no mistakes. They are inevitable yet manageable; the way to manage and identify them is through tests. As systems grow in complexity, testing becomes more than simply trying something to see if it works or not. Tests are pieces of code that check whether other pieces of code work as intended. I know, it sounds painful, but this is what we signed up for when choosing computer science. Since it's too late to drop out now, let's delve into testing and understand how to do it best.

Basics of testing

The purpose of testing is clear - it is to ensure that your code is not broken. However, there are different methods of achieving this seemingly easy task. Below are the main methods of testing based on my experience:

Unit tests: These are low-level and fast running tests that check the functionality of individual components of your application, such as specific functions, classes, or modules.

Integration tests: These tests verify whether the different components of the application are connected and interact in the expected manner. They ensure that the units covered by unit tests still function properly when connected to one another. For example, they check whether data flows from one function to another as expected.

Smoke tests: These tests ensure that the core features of your program work as expected. They are less comprehensive than integration tests but still ensure that the main functionality of the program is intact. It is common to run smoke tests before integration tests as they are meant to be faster and more general.

The purpose and use of each method will become clearer once I provide you with some code examples.
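As a rough preview, here is a minimal sketch of how the three kinds of test differ on a toy example. The functions normalise and save_user, and the dictionary standing in for a database, are made up for illustration; they are not part of the application tested later in this post.

```python
def normalise(username):
    """First unit: strip surrounding spaces and lowercase the name."""
    return username.strip().lower()


def save_user(db, username):
    """Second unit: store the normalised name and return its new id."""
    user_id = len(db) + 1
    db[user_id] = normalise(username)
    return user_id


# Unit test: checks one function in isolation
def test_normalise_unit():
    assert normalise("  Mark ") == "mark"


# Integration test: checks that the two units work together
def test_save_user_integration():
    db = {}  # hypothetical stand-in for a real database
    user_id = save_user(db, "  Mark ")
    assert db[user_id] == "mark"


# Smoke test: a quick, shallow check that the main path works at all
def test_smoke():
    assert save_user({}, "x") == 1
```

Saved in a file such as test_preview.py (a name chosen here only as an example), all three functions would be picked up by pytest.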

It's important to understand that these testing methodologies are not used separately, but in conjunction. In a real-world software application, it is rare to see only unit tests or only integration tests. The advantage of writing tests like this is that it automates the integration process. So, when you add new features or make changes to your system, you can catch any potential errors by running the smoke tests you've previously defined. It's a quick check to ensure everything is working as expected and nothing is broken. Running all the tests is usually part of the CI/CD pipeline. This means that when a programmer submits a pull request, all the tests are run. Only if everything passes can the code be merged into the main codebase of the company.

Pytest

If you, like me, have had the fortune of being taught by the legendary Mark Handley, you might have watched one of his videos that touched on writing tests using pytest. In this post, I want to dive deeper and show you how pytest is conventionally used in the world of software engineering. I will use it to test a basic example API (created with FastAPI) to emulate the everyday struggles of a backend engineer.

Pytest is a Python package that provides programmers with the tools they need to simplify and automate testing. It is very easy to get started with, yet powerful enough for complex testing of larger systems. Before using it, make sure you install it by running pip install -U pytest in the terminal.

Preliminary syntax explanation:

assert - this keyword checks a boolean condition. If the condition evaluates to False then an assertion error is raised and pytest marks the test as failed. If the condition evaluates to True then nothing happens and the test carries on. For example: assert 2 + 3 == 4 would raise an assertion error and fail the test whereas assert 2 + 3 == 5 wouldn’t. If a given test function terminates without raising an error then that test has passed.

Decorators - you will see some code with an @ symbol that comes before our test definition. The @ sign is followed by the name of a function which takes the function below it (in our case, the test) as a parameter and adds extra functionality to it. You don’t have to worry about this too much at the moment but if you wish to find out more I recommend this article.

Here are some of pytest’s features:

Auto-discovery: This means that pytest will search for all files that follow the naming pattern test_{name}.py or {name}_test.py. Within those files it will execute all functions with test prefixed in their name; if these functions are in a class then the class should have Test prefixed in its name. These are our test functions. You can also edit the pytest.ini file to add custom discoverable names like so:

[pytest]
python_files = check_*.py
python_classes = Check
python_functions = *_check

Running the tests: You can run the tests by simply executing pytest in your terminal. Pytest will then search your current directory (and its subdirectories) for any tests that follow the naming conventions mentioned above and execute them. You can run pytest -v, which puts pytest in verbose mode, meaning that it prints each test being run on a separate line. If you want to run only one file you can specify that by running pytest -v test_file.py, or if you only want one function you can run pytest -v test_file.py::test_function

Parameterisation: This allows you to run the same test multiple times with different values. This is usually used to test a particular feature with various inputs without having to write a separate test for each input scenario.

- Example:

import pytest

def add(a, b):
    return a + b

# runs the test three times with the arguments
# (1, 2, 3), (2, 3, 5) and (10, 20, 30)
@pytest.mark.parametrize(
    "a, b, expected", [(1, 2, 3), (2, 3, 5), (10, 20, 30)]
)
def test_add(a, b, expected):
    assert add(a, b) == expected
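As an optional variation, each set of arguments can be given a readable label. The sketch below uses pytest.param with its id argument; the labels and the extra input values are my own choices for illustration.

```python
import pytest


def add(a, b):
    return a + b


@pytest.mark.parametrize(
    "a, b, expected",
    [
        # the id is only a label used in the test report
        pytest.param(1, 2, 3, id="small-ints"),
        pytest.param(-1, 1, 0, id="negative"),
        pytest.param(0.5, 0.25, 0.75, id="floats"),
    ],
)
def test_add_labelled(a, b, expected):
    assert add(a, b) == expected
```

In verbose mode the label appears in square brackets after the test name, for example test_add_labelled[negative], which makes it easier to see which input failed.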

Fixtures: A fixture is a function that pytest runs before each test function that requests it (a test requests a fixture by listing its name as a parameter). It is used to complete any setup steps that are the same across multiple tests. This way, you only write the code for the setup once and can reuse it in every test that needs it; it reduces repetitiveness.

- Example:

import pytest

@pytest.fixture
def input_user():
    # creates a user instance from a predefined class
    user = User(id=1, name="Mark")
    # uses a predefined function
    add_user_to_database(user)
    yield user  # pauses the fixture to run the test function
    remove_user_from_database(user)  # uses a predefined function

# the parameter name must match the name of the fixture
def test_user_id(input_user):
    assert input_user.id == 1

def test_user_name(input_user):
    assert input_user.name == "Mark"

This may appear slightly more complex, but essentially what we're doing is creating a user and adding it to our hypothetical database. Before removing the user from the database (to avoid cluttering it with a bunch of test users), we yield it, meaning we return it without terminating the function. This user is passed to every test function that lists input_user as a parameter. Once a test function finishes running and the user is no longer needed, it is removed from the database. We wrote this setup code once and can now test this example user in as many tests as we want without having to rewrite it for each one.

Testing a simple application

Now that we are familiar with pytest, we can begin examining examples of each testing method that I mentioned earlier. Here is a basic FastAPI setup for a simplified Twitter clone API with an SQL database.

Here is the application code in a main.py file:

from fastapi import FastAPI, Depends, HTTPException
from sqlalchemy import create_engine, Column, Integer, String, ForeignKey, DateTime
from sqlalchemy.sql import func
from sqlalchemy.ext.declarative import declarative_base
from sqlalchemy.orm import sessionmaker, Session, relationship

# Database setup
SQLALCHEMY_DATABASE_URL = "sqlite:///./test.db"
engine = create_engine(SQLALCHEMY_DATABASE_URL, connect_args={"check_same_thread": False})
SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)
Base = declarative_base()

# Database model definitions
class Tweet(Base):
    __tablename__ = "tweets"

    id = Column(Integer, primary_key=True, index=True)
    content = Column(String, index=True)
    timestamp = Column(DateTime(timezone=True), server_default=func.now())
    user_id = Column(Integer, ForeignKey("users.id"))
    author = relationship("User", back_populates="tweets")

class User(Base):
    __tablename__ = "users"

    id = Column(Integer, primary_key=True, index=True)
    username = Column(String, unique=True, index=True)
    tweets = relationship("Tweet", back_populates="author")

# Database dependency
def get_db():
    db = SessionLocal()
    try:
        yield db
    finally:
        db.close()

# FastAPI app
app = FastAPI()

# Create the database tables
Base.metadata.create_all(bind=engine)

# Routes
@app.post("/users/")
def create_user(username: str, db: Session = Depends(get_db)):
    db_user = User(username=username)
    db.add(db_user)
    db.commit()
    db.refresh(db_user)
    return db_user

@app.post("/tweets/")
def create_tweet(user_id: int, content: str, db: Session = Depends(get_db)):
    db_tweet = Tweet(user_id=user_id, content=content)
    db.add(db_tweet)
    db.commit()
    db.refresh(db_tweet)
    return db_tweet

@app.get("/tweets/{user_id}")
def read_tweets(user_id: int, db: Session = Depends(get_db)):
    tweets = db.query(Tweet).filter(Tweet.user_id == user_id).all()
    if not tweets:
        raise HTTPException(status_code=404, detail="User not found or no tweets")
    return tweets

If you haven’t encountered APIs before then this might be a pretty hefty piece of code. Essentially what we’ve done is set up our SQL database using the SQLAlchemy package, then defined the database tables and their properties (e.g. the users table has id and username columns, plus a tweets relationship that links each user to their tweets) and then set up the API endpoints that each perform a certain operation on our database. For the purposes of this blog post we have only added features to create a user and add/read tweets.

If you don’t understand everything in the code above then don’t worry too much as it goes slightly beyond the purposes of this post. I will probably cover all this in a separate article. In the meantime, if you wish to find out more you can explore the following resources:

Okay, now that our basic application is complete, we can start writing our tests.

Unit tests

Remember that by definition unit tests only check the behaviour of individual components; they are atomic and minimal. We have to think of unit tests that only check one specific thing in the context of our application. I suggest that we write one unit test for adding a user to our database and another unit test for deleting a user.

To do this I will have to initialise the database in both of my unit test functions. This is a perfect opportunity to use a pytest fixture. We will write the boilerplate code for initialising the database inside the fixture and use it in both unit tests. Conventionally the fixtures are written in a separate conftest.py file which pytest recognises during the execution. Here are the fixtures (note how we can use one fixture inside another by passing it as a parameter):

import pytest
from sqlalchemy import create_engine
from sqlalchemy.orm import sessionmaker

from main import Base

# Database URL for testing
SQLALCHEMY_DATABASE_URL = "sqlite:///./test.db"

# Fixture to create a database engine
@pytest.fixture(scope="session")
def engine():
    return create_engine(SQLALCHEMY_DATABASE_URL, connect_args={"check_same_thread": False})

# Fixture to create the database tables
@pytest.fixture(scope="session")
def setup_database(engine):
    Base.metadata.create_all(bind=engine)
    yield
    Base.metadata.drop_all(bind=engine)

# Fixture for creating a new session for each test
@pytest.fixture(scope="function")
def db_session(engine, setup_database):
    connection = engine.connect()
    transaction = connection.begin()
    session = sessionmaker(bind=connection)()
    yield session
    # teardown: undo everything the test wrote, then release the connection
    session.close()
    transaction.rollback()
    connection.close()
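The key idea in db_session is that every test works inside a transaction that is rolled back afterwards, so tests cannot see each other's data. Here is a minimal sketch of that idea using the standard-library sqlite3 module instead of SQLAlchemy, so it runs without any extra setup. The helper names (open_database, isolated, count_users) and the in-memory database are assumptions made for this illustration only.

```python
import sqlite3

import pytest


def open_database():
    """Create an in-memory database with an empty users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT UNIQUE)"
    )
    conn.commit()
    return conn


def count_users(conn):
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


def isolated(conn):
    """Hand out the connection, then undo whatever was written."""
    try:
        yield conn
    finally:
        conn.rollback()


@pytest.fixture(scope="session")
def connection():
    conn = open_database()
    yield conn
    conn.close()


@pytest.fixture
def db(connection):
    yield from isolated(connection)


def test_insert_is_visible_inside_the_test(db):
    db.execute("INSERT INTO users (username) VALUES ('testuser')")
    assert count_users(db) == 1


def test_table_is_empty_again(db):
    # the insert made by the other test was rolled back
    assert count_users(db) == 0
```

Both tests share one connection, yet each starts from an empty table because the insert is never committed.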

Now that this tedious step is over, we can write clean unit test code in a separate test_unit.py file like so:

import pytest

from main import User

# uses the db_session fixture inside conftest.py
def test_add_user(db_session):
    # Create a new user instance
    new_user = User(username="testuser")
    db_session.add(new_user)
    db_session.commit()

    # Query the user back
    user = db_session.query(User).filter_by(username="testuser").first()
    assert user is not None
    assert user.username == "testuser"

# uses the db_session fixture inside conftest.py
def test_delete_user(db_session):
    # Add a user, then delete it
    user = User(username="testuser2")
    db_session.add(user)
    db_session.commit()

    # Delete the user
    db_session.delete(user)
    db_session.commit()

    # Query the user back
    deleted_user = db_session.query(User).filter_by(username="testuser2").first()
    assert deleted_user is None

In the test_add_user function we create the user from our User model, add it to the database and commit the change. We then query the database to see if the user we just created exists or not. If the database returns None our assert will fail and so will the test. We then assert to check the username just in case (e.g. there could be an edge case where our query didn’t work as expected so the user was not None but wasn’t the correct one either).

In test_delete_user we add the user, delete it and then query the database to ensure that the user is no longer there by asserting that the result is None.

It might seem pointless to write separate tests for tiny operations like this, but the whole point of unit tests is that in case of a failure the programmer knows exactly what’s wrong with their system. When multiple jobs are done in one function it is difficult to spot what exactly went wrong, whereas with unit tests you immediately know which part of your application malfunctioned.

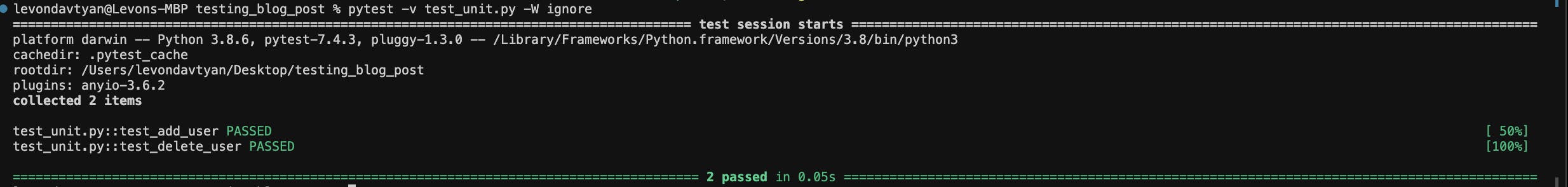

Let’s run our unit test and see if they work as expected.

There is nothing more satisfying in this life than seeing that all your tests have passed (or maybe I just have a sad life).

Note: the -W ignore argument at the end just tells pytest to not print any warnings.

Now let’s see what an erroneous test would look like by changing the initial username in our test_add_user function:

Not so pretty anymore. This is what you can expect to see in your terminal when your tests fail. Here, an assertion error was raised since the user variable had the value None. This was because the SQL query returned None since the username of our query didn’t match the username of the user we added at the start of the test.

After reverting to the working tests, we can now confirm that adding and removing users from our database works as expected. Time to move on to integration testing.

Integration tests

We have to start testing the connection and data flows between the different components of our application. So, let’s test the API and the database in unison. The plan is to add two tests, one that makes a POST request to the /users/ endpoint and another to the /tweets/ endpoint. In the case of a successful API request, we will check whether our database became populated with the new user and tweet in correspondence with the API call. These tests will ensure that our API and database interact in the expected manner.

For this example, I will use parameterisation in order to test multiple users and tweets at once.

Let’s add the tests in test_integration.py:

import pytest
from fastapi.testclient import TestClient

from main import app, get_db, User, Tweet

# used to make a test client to interact with the api
@pytest.fixture
def client(db_session):
    # make the api use the test session instead of opening its own
    def override_get_db():
        yield db_session

    app.dependency_overrides[get_db] = override_get_db
    yield TestClient(app)
    # remove the override so it cannot leak into other tests
    app.dependency_overrides.clear()

@pytest.mark.parametrize("username", ["user1", "user2", "user3"])
def test_create_user(client, db_session, username):
    # makes a POST request to the /users/ endpoint
    # (params builds and encodes the query string for us)
    response = client.post("/users/", params={"username": username})
    # checks that the api request was successful
    assert response.status_code == 200
    # checks that username returned by the api matches the one we sent
    assert response.json()["username"] == username
    # checks that the user was successfully added to the database
    assert db_session.query(User).filter_by(username=username).first()

@pytest.mark.parametrize("content", ["Hello World", "Goodbye World"])
def test_create_tweet(client, db_session, content):
    # create the user to associate the tweet with
    user = User(username="testuser")
    db_session.add(user)
    db_session.commit()

    # makes a POST request to the /tweets/ endpoint
    response = client.post("/tweets/", params={"user_id": user.id, "content": content})
    # checks that the api request was successful
    assert response.status_code == 200
    # checks the content returned by the api matches the one we sent
    assert response.json()["content"] == content
    # checks that the tweet was successfully added to the database
    assert db_session.query(Tweet).filter_by(content=content).first()

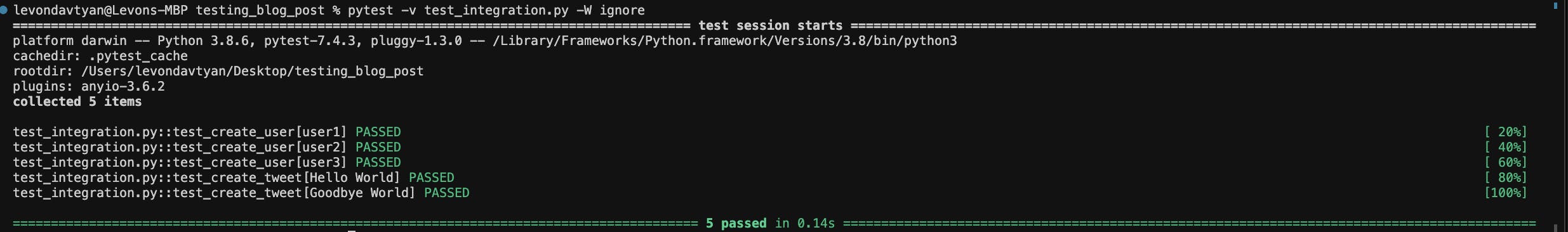

Let’s run the tests and see if they work.

Notice how it prints out the result for each parameter passed in through parameterisation? This is very useful to check which input values failed and identify edge cases that aren’t accounted for in your program.

Now that all our integration tests have passed we know that our API properly interacts with our database.

Smoke tests

Due to the simplicity of this program there aren’t that many smoke tests that we can or should write. I suppose we could write a very basic one like so:

from fastapi.testclient import TestClient

from main import app

client = TestClient(app)

def test_read_main():
    # main.py defines no "/" route, so we request the /docs page
    # that FastAPI generates automatically
    response = client.get("/docs")
    # checks if the api call was successful
    assert response.status_code == 200

This smoke test ensures that our FastAPI application is up and running as expected. In a project this small it adds little, since any such error would be spotted in the integration tests anyway. However, the idea is that smoke tests are the first thing we should run after making a change. In more complex systems, there can be hundreds of integration tests that take much longer to run. Before running those we should run our smoke tests (in this case we only have one) to see whether it is even worth running the integration tests. If a smoke test fails, our system is fundamentally broken and the integration tests will fail too, so there is no point in wasting our time trying to run them. For example, if our smoke test here fails, our API is not running properly, hence all our integration tests will fail too.

Summary

As demonstrated above, pytest and automated testing are very powerful tools that can save you hours of debugging. Although they are more useful in larger software applications, I highly encourage you to start using the above methods in your personal projects and even uni assignments. Writing tests is a great skill to master that is highly valuable in the industry, hence it is better to start early and develop healthy programming habits.

PS: About UCL Devs Blog

UCL Devs Blog is a platform where any developer studying at UCL can share a technical blog post. The goal is to give all students an opportunity to write about anything that they are working on or found interesting and would like to share with everyone else. If you wish to write a post then feel free to DM the Instagram page @ucldevs or @levdavtian. The posts can be about absolutely anything coding related, in any format that the writer prefers. All the blog posts will be publicly available to read so students can add them to their CVs/personal websites. This is highly valued by employers and recruiters so make sure to make good use of it! As of now all the posts will be published on Hashnode; however, there are plans to create a separate website if the blog gains a lot of traction.